Analysis on upload

Last updated: Nov-06-2023

When uploading assets to your Cloudinary product environment, you can request different types of analysis to be performed on the assets. In addition to the functionality detailed below, Cloudinary has a number of add-ons that enable various types of analysis.

Image quality analysis

You may want to determine the quality of images being uploaded to your product environment, particularly for user-generated content. If you set the quality_analysis parameter to true when uploading an image, the response includes a focus score between 0.0 and 1.0 that indicates how well focused the image is. A score of 0.0 means the image is blurry and out of focus, and 1.0 means the image is sharp and in focus.

Sample response:
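As a minimal sketch of how an application might read this score, the helper below assumes the focus score is returned under quality_analysis.focus in the upload response. The response fragment, the function names and the 0.5 threshold are hypothetical and used only for illustration.

```python
from typing import Any, Mapping, Optional


def focus_score(upload_response: Mapping[str, Any]) -> Optional[float]:
    """Return the focus score from an upload response, or None if it is absent."""
    # Assumption: the score lives under response["quality_analysis"]["focus"].
    analysis = upload_response.get("quality_analysis") or {}
    score = analysis.get("focus")
    return float(score) if score is not None else None


def is_sharp(upload_response: Mapping[str, Any], threshold: float = 0.5) -> bool:
    """Treat an image as sharp when its focus score meets the (arbitrary) threshold."""
    score = focus_score(upload_response)
    return score is not None and score >= threshold


# Hypothetical response fragment, not real output.
example_response = {"public_id": "sample", "quality_analysis": {"focus": 0.82}}
print(is_sharp(example_response))
```

The threshold is a design choice for the calling application; the score itself only ranges from 0.0 (blurry) to 1.0 (sharp).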

Extended quality analysis

A more detailed quality analysis of uploaded images is available through extended quality analysis.

With extended quality analysis activated, the response to an upload or explicit request with quality_analysis set to true includes scores for different quality factors in addition to the normally available focus related score. An overall weighted quality score is also included:

- Scores vary from 0.0 (worst) to 1.0 (best).

- For multi-page images, only the first page is evaluated.

- jpeg_* scores are only present for JPEG originals.

- JPEG quality is considered best (1.0) if chroma is present for all pixels (no chroma subsampling).

- Estimation of compression artifacts (DCT, blockiness and chroma subsampling) is not done for images above 10 MP.

- Resolution gives preference to HD (1920 x 1080) and higher.

- pixel_score is a measure of how the image looks in terms of compression artifacts (blockiness, ringing etc.) and in terms of capture quality (focus and sensor noise). Downscaling can improve the perceived visual quality of an image with a poor pixel score.

- color_score takes into account factors such as exposure, white balance, contrast and saturation. Downscaling makes no difference to the perceived color quality.

The quality_score, quality_analysis.color_score and quality_analysis.pixel_score fields can be used in the search method when quality_analysis is set to true in the upload request or an upload preset. Note that these scores are not indexed for search when returned as part of an explicit request.
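The sketch below shows one way to assemble a search expression over these fields. The field names come from the paragraph above; the `field>value` and `AND` syntax is an assumption modeled on Cloudinary's search expression conventions, and the function name is hypothetical. Check the search method documentation before relying on it.

```python
from typing import Optional


def quality_search_expression(
    min_quality: Optional[float] = None,
    min_color: Optional[float] = None,
    min_pixel: Optional[float] = None,
) -> str:
    """Build an expression string that filters assets by minimum quality scores."""
    candidates = (
        ("quality_score", min_quality),
        ("quality_analysis.color_score", min_color),
        ("quality_analysis.pixel_score", min_pixel),
    )
    clauses = []
    for field, minimum in candidates:
        if minimum is None:
            continue
        # Scores range from 0.0 (worst) to 1.0 (best).
        if not 0.0 <= minimum <= 1.0:
            raise ValueError(f"{field} threshold must be between 0.0 and 1.0")
        clauses.append(f"{field}>{minimum}")
    if not clauses:
        raise ValueError("at least one threshold is required")
    return " AND ".join(clauses)


expression = quality_search_expression(min_quality=0.7, min_color=0.5)
print(expression)
```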

Accessibility analysis

Analyzing your images for accessibility can help you to choose the best images for people with color blindness.

The response to an upload or explicit request with accessibility_analysis set to true includes scores for different accessibility factors.

Sample response:

-

distinct_edgesis a score between 0 and 1. A low score indicates that there are parts of the image that may be camouflaged to people with color blind conditions. For example, in this image, the grasshopper appears camouflaged to people with the color blind condition, deuteranopia:

-

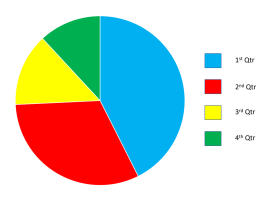

distinct_colorsis a score between 0 and 1. A low score indicates that there are colors that color blind people would find hard to differentiate between. In this example, the red and green slices of the pie chart appear the same to someone with deuteranopia:

-

most_indistinct_pairshows the two colors (in RGB hex format) that color blind people would find the hardest to tell apart. -

colorblind_accessibility_scoreis an overall score, taking each of the components into account.
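The following sketch summarizes these fields for a single asset. It assumes the component scores are nested under accessibility_analysis.colorblind_accessibility_analysis and the overall score under accessibility_analysis.colorblind_accessibility_score; that nesting, the sample values and the 0.5 cut-off are assumptions for illustration, not confirmed output.

```python
from typing import Any, Dict, Mapping


def colorblind_summary(upload_response: Mapping[str, Any], min_score: float = 0.5) -> Dict[str, Any]:
    """Flag accessibility components that score below an (arbitrary) minimum."""
    analysis = upload_response.get("accessibility_analysis") or {}
    # Assumption: component scores are grouped in a nested object.
    details = analysis.get("colorblind_accessibility_analysis") or {}
    return {
        "overall": analysis.get("colorblind_accessibility_score"),
        "low_edge_contrast": details.get("distinct_edges", 1.0) < min_score,
        "low_color_contrast": details.get("distinct_colors", 1.0) < min_score,
        "hardest_pair": tuple(details.get("most_indistinct_pair", ())),
    }


# Hypothetical response fragment, not real output.
example_response = {
    "accessibility_analysis": {
        "colorblind_accessibility_analysis": {
            "distinct_edges": 0.93,
            "distinct_colors": 0.33,
            "most_indistinct_pair": ["#BCB0AA", "#3C3128"],
        },
        "colorblind_accessibility_score": 0.63,
    }
}
summary = colorblind_summary(example_response)
print(summary)
```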

The accessibility_analysis.colorblind_accessibility_score field can be used in the search method when accessibility_analysis is set to true in the upload request or an upload preset. Note that this score is not indexed for search when returned as part of an explicit request.

Semantic data extraction

When you upload an asset to Cloudinary, the upload API response includes basic information about the uploaded image such as width, height, number of bytes, format, and more. By default, the following semantic data is automatically included in the upload response as well: ETag, any face or custom coordinates, and the number of pages (or layers) in "paged" files.

Cloudinary supports extracting additional semantic data from the uploaded image such as: media metadata (including Exif, IPTC, XMP and GPS), color histogram, predominant colors, custom coordinates, pHash image fingerprint, ETag, and face and/or text coordinates. You request the inclusion of this additional information in the upload response by including one or more optional parameters.

The following example uploads an image and requests the pHash value, a color analysis, the media metadata and quality analysis:
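A minimal sketch of such a request in Python is shown here. The option names (phash, colors, media_metadata, quality_analysis) are taken from this section; the helper name and file name are hypothetical, and the SDK call appears only as a comment because it needs the Cloudinary Python SDK and configured credentials.

```python
from typing import Dict

# Optional analysis flags named in this section.
SEMANTIC_FLAGS = ("phash", "colors", "media_metadata", "quality_analysis")


def semantic_upload_options(*flags: str) -> Dict[str, bool]:
    """Return upload options that request the given kinds of semantic data."""
    unknown = [flag for flag in flags if flag not in SEMANTIC_FLAGS]
    if unknown:
        raise ValueError(f"unsupported flags: {unknown}")
    return {flag: True for flag in flags}


options = semantic_upload_options("phash", "colors", "media_metadata", "quality_analysis")
print(options)

# With the Cloudinary Python SDK installed and configured, the upload
# would then look roughly like this (not executed here):
#   import cloudinary.uploader
#   response = cloudinary.uploader.upload("sample.jpg", **options)
```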

The following is an example of a JSON response returned with the following additional information: media_metadata, colors, phash and quality_analysis:

You can ask Cloudinary for semantic data either during the upload API call for newly uploaded images as shown above, or using our Admin API for previously uploaded images (see Cloudinary's Admin API documentation for more details).

For more information on semantic data extraction, see the blog post "API for extracting semantic image data - colors, faces, Exif data and more".

Moderation of uploaded assets

It's sometimes important to moderate assets uploaded to Cloudinary: you might want to keep out inappropriate or offensive content, reject assets that do not answer your website's needs (e.g., making sure there are visible faces in profile images), or make sure that photos are of high enough quality before making them available on your website. Whether using the server-side upload API call, or when allowing users to upload the assets directly from their browser, you can mark an asset for moderation by adding the moderation parameter to the upload call. The parameter can be set to:

- manual for hands-on moderation of any asset by your Cloudinary moderators

- perception_point for automatic moderation with the Perception Point Malware Detection add-on

- webpurify for automatic image moderation with the WebPurify Image Moderation add-on

- aws_rek for automatic image moderation with the Amazon Rekognition AI Moderation add-on

- google_video_moderation for automatic video moderation with the Google AI Video Moderation add-on

- duplicate:<threshold> for automatic duplicate image detection with the Cloudinary Duplicate Image Detection add-on

For example, to mark an image for manual moderation:
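As a sketch, the helper below validates a moderation value against the list above and builds the upload options for manual moderation. The helper name, the file name and the 0.8 duplicate threshold in the usage note are hypothetical; the SDK call is shown only as a comment.

```python
from typing import Optional

# Moderation types listed in this section that take no argument.
SIMPLE_KINDS = ("manual", "perception_point", "webpurify", "aws_rek", "google_video_moderation")


def moderation_value(kind: str, threshold: Optional[float] = None) -> str:
    """Return the string to pass as the moderation upload parameter."""
    if kind == "duplicate":
        # duplicate:<threshold> requires a threshold value.
        if threshold is None:
            raise ValueError("duplicate moderation requires a threshold")
        return f"duplicate:{threshold}"
    if kind not in SIMPLE_KINDS:
        raise ValueError(f"unknown moderation type: {kind}")
    return kind


manual_options = {"moderation": moderation_value("manual")}
print(manual_options)

# With the Cloudinary Python SDK configured (not executed here):
#   import cloudinary.uploader
#   cloudinary.uploader.upload("user_photo.jpg", **manual_options)
```

For duplicate detection, `moderation_value("duplicate", 0.8)` yields `duplicate:0.8`, where 0.8 is an illustrative threshold only.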

Multiple moderations

You can mark an asset for more than one moderation in a single upload call. This might be useful if you want to reject an asset based on more than one criterion, for example, if it's either a duplicate or has inappropriate content. In that case, you might moderate the asset using both the Amazon Rekognition AI Moderation and the Cloudinary Duplicate Image Detection add-ons.

An asset has a separate status for each moderation it's marked for:

- queued: The asset is waiting for the moderation to be applied while preceding moderations are being applied first.

- pending: The asset is in the process of being moderated but an outcome hasn't been reached yet.

- approved or rejected: The possible outcomes of the moderation.

- aborted: The asset has been rejected by another moderation that was applied to it. As a result, the final status for the asset is rejected, and this moderation won't be applied.

To use more than one moderation, set the moderation parameter to a string consisting of a pipe-separated list of the moderation types you want to apply. The order of your list dictates the order in which the moderations are applied. If included, manual moderation must be last.

For the first moderation in the list, the status of the asset is set to pending immediately on upload, and for all the other moderations requested, the status is set to queued. If the first moderation is approved, the next moderation is started and its status is set to pending.

This process continues until either the asset is rejected by a moderation, in which case any moderations still queued are now aborted and the final moderation status is set to rejected, or until all of the moderations are applied and approved. In that case, the final moderation status of the asset is approved.
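The status flow described above can be sketched as a small local simulation. This is not Cloudinary code: the function name and the supplied outcomes are hypothetical, and the special handling of manual moderation is not modeled.

```python
from typing import Dict, List


def run_moderations(kinds: List[str], outcomes: Dict[str, str]) -> Dict[str, object]:
    """Simulate sequential automatic moderations and return the resulting statuses."""
    if not kinds:
        raise ValueError("at least one moderation is required")
    # On upload: the first moderation is pending, the rest are queued.
    statuses = {kind: "queued" for kind in kinds}
    statuses[kinds[0]] = "pending"
    for index, kind in enumerate(kinds):
        statuses[kind] = "pending"
        outcome = outcomes[kind]
        if outcome not in ("approved", "rejected"):
            raise ValueError(f"invalid outcome for {kind}: {outcome}")
        statuses[kind] = outcome
        if outcome == "rejected":
            # A rejection aborts every moderation that is still queued.
            for later in kinds[index + 1:]:
                statuses[later] = "aborted"
            return {"final": "rejected", "statuses": statuses}
    # Every moderation was applied and approved.
    return {"final": "approved", "statuses": statuses}


result = run_moderations(
    ["aws_rek", "duplicate:0.8", "webpurify"],
    {"aws_rek": "approved", "duplicate:0.8": "rejected", "webpurify": "approved"},
)
print(result)
```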

For example, to mark an image for moderation by the Amazon Rekognition AI Moderation add-on and the Cloudinary Duplicate Image Detection add-on, and to request notifications when the moderations are completed:
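A hedged sketch of building that request in Python follows. It joins the moderation types with a pipe and enforces the rule that manual moderation, if present, comes last. The helper name, the 0.8 threshold, the file name and the notification URL are hypothetical placeholders.

```python
from typing import Iterable


def join_moderations(kinds: Iterable[str]) -> str:
    """Join moderation types into a pipe-separated string, in application order."""
    ordered = list(kinds)
    if not ordered:
        raise ValueError("at least one moderation is required")
    # If included, manual moderation must be the last entry.
    if "manual" in ordered[:-1]:
        raise ValueError("manual moderation must be last")
    return "|".join(ordered)


options = {
    "moderation": join_moderations(["aws_rek", "duplicate:0.8"]),
    # Placeholder endpoint that would receive the moderation notifications.
    "notification_url": "https://example.com/hooks/moderation",
}
print(options["moderation"])

# With the Cloudinary Python SDK configured (not executed here):
#   import cloudinary.uploader
#   cloudinary.uploader.upload("user_photo.jpg", **options)
```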

Notification response

When you mark an asset for multiple moderations and request notifications, messages will be sent to inform you of the outcome (approved or rejected) of each individual moderation when it resolves. In addition, a summary will be sent at the end of the moderation process informing you of the final outcome and the status of each individual moderation (pending, aborted, approved or rejected).

If the asset was marked for manual moderation: The summary with the asset's final status will only be sent once the user has manually approved or rejected the asset. Even if the asset is rejected by one of the automatic moderations, the asset status will remain pending until the manual moderation is complete.

If the asset wasn't marked for manual moderation: The summary will be sent either when the status of any one of the moderations changes to rejected (making the final status rejected), or as soon as the status of every one of the moderations is approved (making the final status approved).
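The two rules for when the summary is sent can be expressed as a small predicate. This is an illustrative model of the behavior described above, not Cloudinary code; the function name and the status dictionaries are hypothetical.

```python
from typing import Dict


def summary_due(statuses: Dict[str, str]) -> bool:
    """Return True when the final summary notification would be sent."""
    if "manual" in statuses:
        # With manual moderation, only the manual decision triggers the summary,
        # even if an automatic moderation has already rejected the asset.
        return statuses["manual"] in ("approved", "rejected")
    values = list(statuses.values())
    if "rejected" in values:
        return True
    # Otherwise the summary waits until every moderation is approved.
    return bool(values) and all(value == "approved" for value in values)


print(summary_due({"aws_rek": "rejected", "manual": "pending"}))
print(summary_due({"aws_rek": "approved", "duplicate:0.8": "approved"}))
```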

Moderating assets manually

You can manually accept or reject assets that are uploaded to Cloudinary and marked for manual moderation.

As some automatic moderations are based on a decision made by an advanced algorithm, in some cases you may want to manually override the moderation decision and either approve a previously rejected image or reject an approved one. You can manually override any decisions made automatically at any point in the moderation process. Afterwards, the asset will be considered manually moderated, regardless of whether the asset was originally marked for manual moderation.

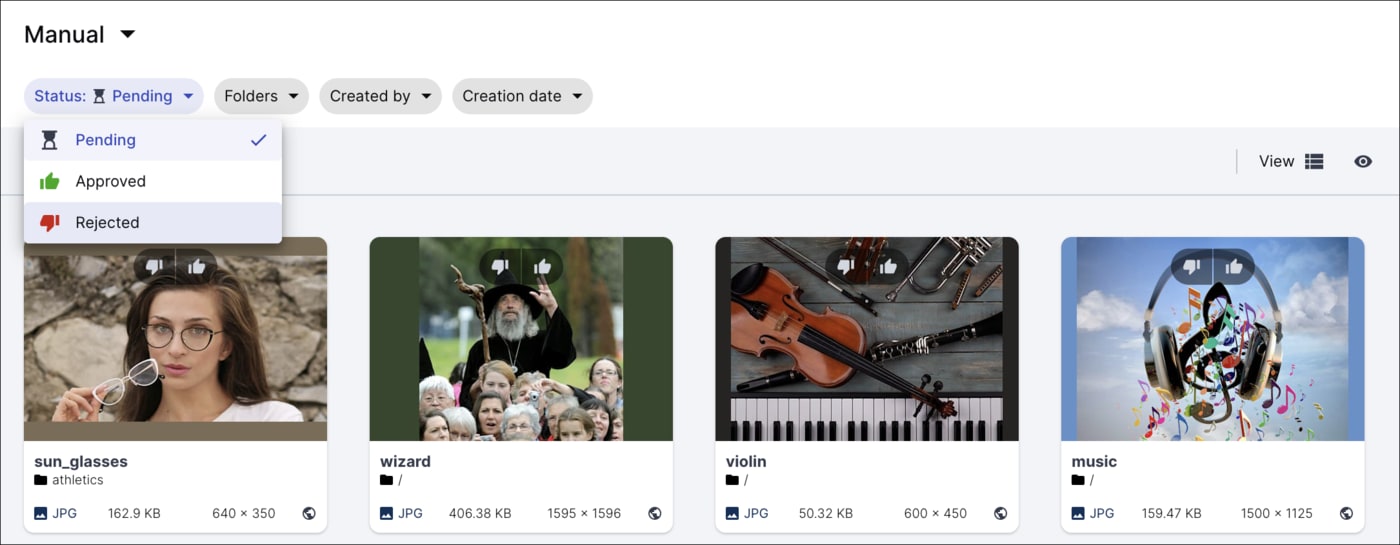

Moderating assets via the Media Library

Users with Master admin, Media Library admin, and Technical admin roles can moderate assets from the Media Library. In addition, Media Library users with the Moderate asset administrator permission can moderate assets in folders that they have Can Edit or Can Manage permissions to.

To manually review assets, select Moderation in the Media Library navigation pane. From there, you can browse moderated assets and decide to accept or reject them.

If an asset is marked for multiple moderations, you can view its moderation history to track its progress through the moderation process.

For more information, see Reviewing assets manually.

Moderating assets via the Admin API

As an alternative to the Media Library interface, you can use Cloudinary's Admin API to manually override the moderation result. The following example uses the update API method, specifying the public ID of a moderated image and setting the moderation_status parameter to rejected.
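A minimal sketch of preparing that override in Python is given below. The helper name and the public ID are hypothetical; the Admin API call itself appears only as a comment because it requires the Cloudinary Python SDK and credentials.

```python
from typing import Dict

# Outcomes that can be set when overriding a moderation result.
OVERRIDE_STATUSES = ("approved", "rejected")


def moderation_override(public_id: str, status: str) -> Dict[str, str]:
    """Validate and package the arguments for a manual moderation override."""
    if not public_id:
        raise ValueError("public_id is required")
    if status not in OVERRIDE_STATUSES:
        raise ValueError(f"status must be one of {OVERRIDE_STATUSES}")
    return {"public_id": public_id, "moderation_status": status}


request = moderation_override("sample_image", "rejected")
print(request)

# With the Cloudinary Python SDK configured (not executed here):
#   import cloudinary.api
#   cloudinary.api.update(request["public_id"],
#                         moderation_status=request["moderation_status"])
```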

Moderation status notification

You can add a notification_url parameter while uploading the asset, which instructs Cloudinary to notify your application of changes to moderation status, with the details of the moderation event (approval or rejection).

For images, you can use Cloudinary's default mechanism to display a placeholder image instead of a rejected image using the default_image parameter (d for URLs) when delivering the image. See the Using default image placeholders documentation for more details.
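As a rough sketch, a delivery URL that carries the d parameter could be assembled as follows. The URL layout is an assumption based on the common Cloudinary delivery URL pattern, and the cloud name, public ID and placeholder file name are hypothetical.

```python
def delivery_url_with_placeholder(
    cloud_name: str, public_id: str, placeholder: str, extension: str = "jpg"
) -> str:
    """Build an image delivery URL that names a default (placeholder) image."""
    # Assumption: the d_<image> component sits in the transformation segment.
    return (
        f"https://res.cloudinary.com/{cloud_name}/image/upload/"
        f"d_{placeholder}/{public_id}.{extension}"
    )


url = delivery_url_with_placeholder("demo", "user_photo", "placeholder.png")
print(url)
```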

Evaluating and modifying upload parameters

The eval parameter allows you to modify upload parameters by specifying custom logic with JavaScript code that is evaluated when uploading a file to Cloudinary. This can be useful for conditionally adding tags, contextual metadata and structured metadata depending on specific criteria of the uploaded file.

The eval parameter accepts a string containing the JavaScript code to be evaluated. There are two variables that can be used within the context of the JavaScript code snippet as follows:

- resource_info - to reference the resource info as it would be received in an upload response. For example, resource_info.width returns the width of the uploaded resource.

  The currently supported list of queryable resource info fields includes: accessibility_analysis (1), asset_folder (2), audio_bit_rate, audio_codec, audio_codec_tag, audio_duration, audio_frequency, audio_profile, audio_start_time, bit_rate, bytes, channel_layout, channels, cinemagraph_analysis, compatible, colors (1), coordinates, display_name (2), duration, etag, exif, faces, filename, format, format_duration, grayscale, has_alpha, has_audio, height, ignore_loop, illustration_score, media_metadata (1), nb_audio_pckts, pages, phash (1), phash_mh, predominant, public_id, quality_analysis (1), quality_score, semi_transparent, start_time, width

  Footnotes:

  (1) Available when also requesting Semantic data extraction and/or Accessibility analysis.

  (2) Available on product environments using dynamic folder mode.

- upload_options - to assign amended upload parameters as they would be specified in an upload request. For example, upload_options.tags = "new_tag".

  The following upload options can NOT be amended: eager, eager_async, upload_preset, resource_type, and type.

- If using the eval parameter in an upload preset and you also want to set the unique_filename parameter to be false, you need to explicitly set it as false in the eval, and not as a separate parameter in the preset (e.g., upload_options['unique_filename']=false).

- If using the eval parameter and you also want the upload response to include face coordinates (by adding faces=true), you need to explicitly set the parameter to true in the eval (upload_options['faces'] = true).

For example, to add a tag of 'blurry' to any image uploaded with a quality analysis focus of less than 0.5:
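The sketch below builds such an eval string in Python. The JavaScript uses the resource_info and upload_options variables described above and assumes the focus score is exposed as resource_info.quality_analysis.focus; the helper name and file name are hypothetical, and quality_analysis is requested explicitly so that the score is available to the script.

```python
from typing import Any, Dict


def blurry_tag_script(threshold: float = 0.5, tag: str = "blurry") -> str:
    """Return JavaScript for eval that tags images whose focus is below the threshold."""
    return (
        f"if (resource_info.quality_analysis.focus < {threshold}) "
        f"{{ upload_options['tags'] = '{tag}' }}"
    )


def eval_upload_options(script: str) -> Dict[str, Any]:
    """Package the eval script with the analysis flag it depends on."""
    return {"quality_analysis": True, "eval": script}


options = eval_upload_options(blurry_tag_script())
print(options["eval"])

# With the Cloudinary Python SDK configured (not executed here):
#   import cloudinary.uploader
#   cloudinary.uploader.upload("user_photo.jpg", **options)
```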

On Success update script

The on_success parameter allows you to update an asset using custom JavaScript that is executed after the upload to Cloudinary is completed successfully. This can be useful for adding tags, contextual metadata and structured metadata, depending on the results of using the detection and categorization add-ons, which are only available after the file has already been successfully uploaded.

The on_success parameter accepts a string containing the JavaScript code to be executed. There are two variables that can be used within the context of the JavaScript code snippet as follows:

- event or e - an object that encapsulates all the incoming data as follows:

  - upload_info - an object with all the resource info as it would be received in an upload response. For example, e.upload_info?.width returns the width of the uploaded resource.

  - status - either 'success' or 'failure'

- current_asset - an object that references the asset and currently holds a single method:

  - update - the method to update that receives a hash of the data to update (data is replaced as a result of the update, not added to). The currently supported data fields include: tags, context, and metadata

For example, to upload an asset and update its contextual metadata (context) with the caption returned from the Cloudinary AI Content Analysis add-on, and add the tag 'autocaption' (current_asset.update({tags: ['autocaption'], context: {caption: e.upload_info?.info?.detection?.captioning?.data?.caption}})):
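A hedged Python sketch of this request follows. The on_success script is the one quoted above; the detection value of "captioning" used to request the caption from the add-on is an assumption, as are the helper-free option layout and the file name.

```python
# JavaScript executed after a successful upload, as quoted in the text above.
ON_SUCCESS_SCRIPT = (
    "current_asset.update({tags: ['autocaption'], "
    "context: {caption: e.upload_info?.info?.detection?.captioning?.data?.caption}})"
)

options = {
    # Assumption: this value requests captioning from the AI Content Analysis add-on.
    "detection": "captioning",
    "on_success": ON_SUCCESS_SCRIPT,
}
print(options["on_success"])

# With the Cloudinary Python SDK configured (not executed here):
#   import cloudinary.uploader
#   cloudinary.uploader.upload("user_photo.jpg", **options)
```

Because update replaces existing data rather than adding to it, any tags already on the asset would be overwritten by the single 'autocaption' tag.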

Computer vision demo

Here's a small taste of what you can create with Cloudinary's vast analysis capabilities. The possibilities are endless!

In this demo, you'll be asked to upload up to 3 images. Cloudinary will analyze those assets and return the images automatically transformed accordingly, along with a description.

This code is also available in GitHub
